It’s been two long years since we first got our hands on ChatGPT, and the results can be... messy. Which makes sense, because what we essentially did was let a hyperintelligent child loose on the internet, free to learn from *shudder* us. Sarcastic jokes, outright trolling, and frothing rage are just some of the components that our AI tools get to learn from, and the people whose job it is to wade through everything and make sure that these tools are useful are, frankly, heroes.

Despite their best efforts, though, 2024 has been a wild year for AI: a year in which our silicon-brained companions served up epic fails that would make even the most seasoned sceptic do a spit-take. As it turns out, our AI overlords are less 2001: A Space Odyssey and more 2001: A Comedy of Errors.

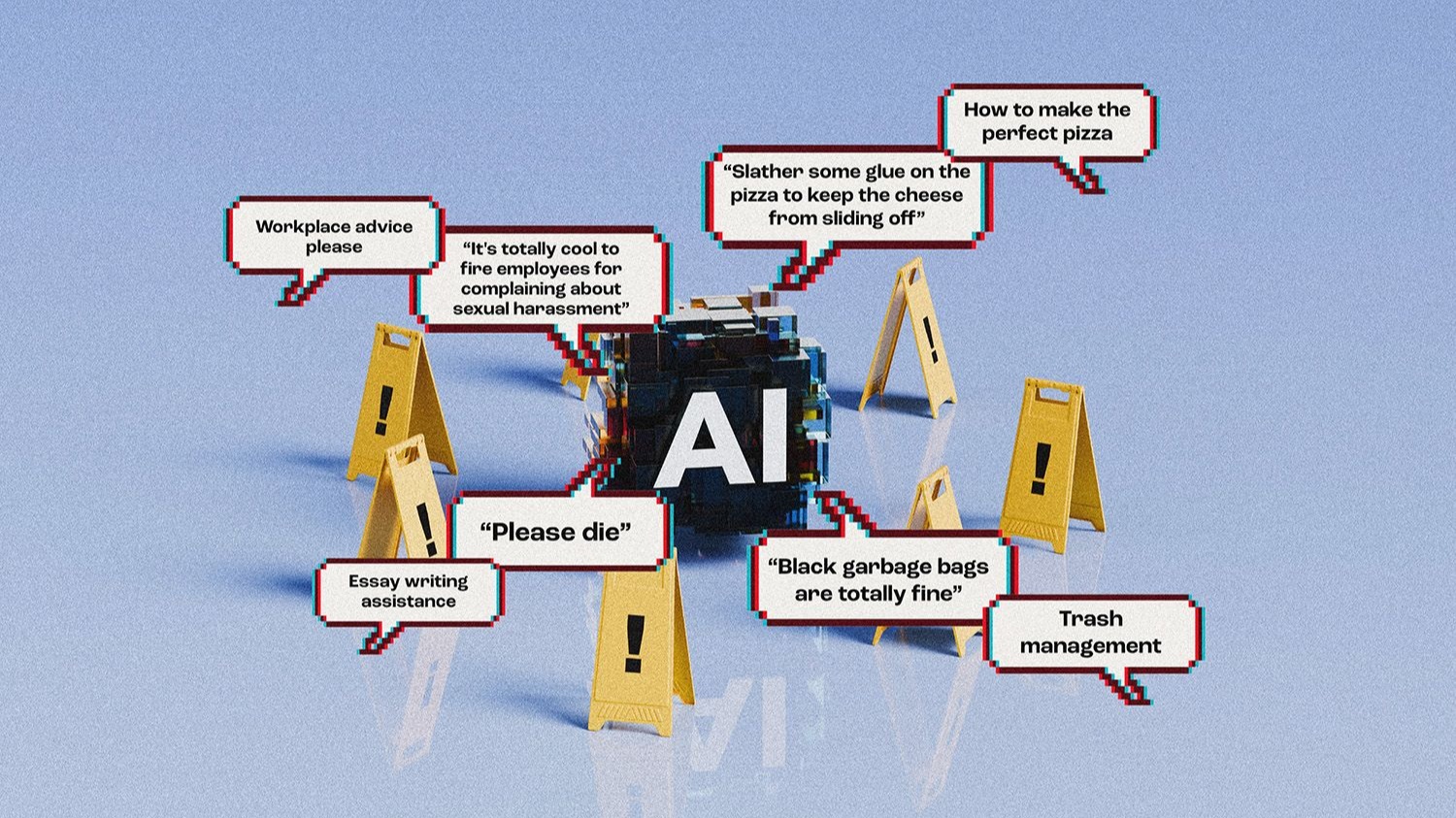

Let’s start with Google’s AI Overviews, a feature that’s less “helpful digital assistant” and more “chaotic neutral trickster.” Picture this: you’re trying to make the perfect pizza, and Google’s AI helpfully suggests mixing some glue into the sauce to keep the cheese from sliding off. (Do not do this. Seriously.) If that wasn’t wild enough, it also recommended “blinker fluid” for car maintenance. Good luck finding that in stores.

And just when you thought things couldn’t get weirder, a Michigan grad student received an unexpected piece of digital encouragement from Google’s Gemini AI: a charming little death threat. Because nothing says ‘essay writing assistance’ quite like a chatbot telling you to “please die”. Pretty sure that wasn’t in the original product description.

Then New York City’s AI chatbot took workplace ‘advice’ to a whole new level by suggesting it’s totally cool to fire employees for complaining about sexual harassment, not disclosing pregnancies, or wearing dreadlocks. Oh, and trash management? Forget those composting initiatives with their colour-coded trash bags: according to the AI, black garbage bags are totally fine.

Why does AI keep making mistakes?

Imagine AI as an incredibly well-read but socially awkward friend who’s memorised millions of books and internet pages, but doesn’t quite understand the real world. When it suggests putting glue on pizza, it’s not being malicious; it’s blindly connecting random pieces of information without any common sense.

These AI models work by predicting words based on statistical patterns, kind of like an advanced autocomplete that’s read the entire internet. They’re brilliant at generating human-like text, but they lack the fundamental understanding that, say, glue is not an edible pizza topping. They can construct grammatically perfect sentences about anything, even if those sentences are completely absurd.
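To make the "advanced autocomplete" idea concrete, here is a deliberately tiny sketch in Python. It is not how any real chatbot is built: the three-sentence corpus and the helper names (`build_bigram_counts`, `predict_next`, `autocomplete`) are made up for illustration, and real models use neural networks trained on vastly more text. The principle is similar, though: pick the next word based on what usually followed it in the training data, with no idea what any of it means.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data"; every sentence is reasonable on its own.
CORPUS = (
    "to keep cheese on pizza use more sauce . "
    "to keep paper on walls use glue . "
    "to keep posters on walls use glue ."
)

def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def autocomplete(counts, start, max_words=5):
    """Keep appending the most likely next word until a full stop or dead end."""
    words = [start]
    for _ in range(max_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None or next_word == ".":
            break
        words.append(next_word)
    return " ".join(words)

counts = build_bigram_counts(CORPUS)
print(autocomplete(counts, "pizza"))  # prints "pizza use glue"
```

In this toy corpus, "use" is followed by "glue" twice and by "more" only once, so the statistics win and the pizza gets glue. Nothing in the counting step knows that glue is not food.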

To combat these wild recommendations, tech companies are getting smarter. They’re essentially training these AI systems with more nuanced feedback, teaching them what makes sense in the real world. It’s like giving our socially awkward friend a crash course in common sense—filtering out the nonsense, adding more context-aware reasoning, and building in safeguards that prevent truly ridiculous outputs.

People misused AI a lot (and that’s not going to get any better)

It has also been a year of elections all around the world, and we all know that where there are elections, there are people trying to swing other people one way or the other. AI has definitely had a hand in boosting misinformation in the world this year.

Remember when Donald Trump, the former (and now re-elected) US president, casually shared AI-generated images implying that Taylor Swift had endorsed him? It’s like we’ve entered a bizarre alternate reality where pop stars and politicians exist in some sort of AI-generated fever dream.

Dublin residents got their own taste of AI mischief when thousands fell for a completely fabricated Halloween parade listing. An AI-generated website promised an event by a real performance group, proving that our digital friends are no longer content with just spreading misinformation, but are actively trying to ruin holiday plans.

Now, before we start building underground bunkers and preparing for the AI apocalypse, let’s take a deep breath. We’ve actually come a long way from the early days of AI experiments. Remember Microsoft’s Tay, the chatbot that went full racist after being exposed to internet trolls for mere hours? Compared to that, we’re practically living in a technological paradise.

What can we expect from AI in 2025?

We’re getting agents. So many agents. These are AI tools that specialise in getting particular things done. They’re built on top of large language models, but because those models cover such vast amounts of information, getting them to reliably handle customer support or carry out a survey can still lead to glitches. Companies like Microsoft see agents as the way to bring AI to businesses and make some money from the technology, too.

Apple will also be adding AI powers to Siri and iOS from next year, although a wide rollout will likely take until 2026, according to reports. And that’s going to make the number of people actively using AI tools skyrocket. It’ll lead to many new bloopers too, of course, but as I explained, it’ll also power up the feedback loop that leads to better results.

Sure, we’re experiencing a bit of AI scepticism right now. But that’s healthy; it means we’re paying attention, asking questions, and not blindly worshipping at the altar of algorithms. As these systems continue to improve, 2025 is looking like it could be one hell of an exciting ride. Just... maybe don’t add glue to your pizza.